I started this post in my newsletter last week, but I decided to move it here because of its length. Weeks ago, Timnit Gebru, researcher and co-lead of the Ethical AI team, ended her collaboration with Google Brain.

In short, the story starts when Dr. Timnit Gebru and some colleagues submitted a paper for feedback and review, and her manager’s manager, the legendary Jeff Dean, and Megan Kacholia (VP of Engineering) ordered her to retract it.

Afterward, she sent an unfortunate email to an internal listserv asking for help and support, and she also asked senior management to meet some conditions for her to keep working at the company. Then she “got resigned” from Google Brain.

It is a terrible but interesting case that touches on ethical issues ranging from diversity and management to corporate interests.

The people best placed to answer all these questions are the ones involved. In this post, I only offer a wrap-up of articles and opinions.

How She Got Resigned

There has been a lot of discussion about whether she resigned or was fired, or whether she was “legally resigned,” which IMHO sounds worse than being fired. The fact is that she was cut off quickly when she had no real intention of leaving the company.

The first step to dig deeper into what happened is to read the email she sent to the Google Brain Women and Allies group, and then Jeff Dean’s message about Timnit’s resignation. Both emails are available on Platformer.

I think sending that email to the Google Brain Women and Allies group was a bad idea, especially knowing that management was also reading it. Its content, including asking people to stop working, is quite irresponsible coming from a manager or co-lead.

Also, “threatening” senior management by asking them to meet her conditions for her to keep working at Google wasn’t the best move. But do you think that is enough to fire anybody?

If you want to fire somebody, you do it, and if that person wants to resign, you let them resign. But you don’t go around saying that someone resigned when they didn’t.

Possible Reasons for Firing Her

Dr. Timnit Gebru is a well-known researcher with a wide impact on AI, on ethics, and on the community. So, what makes a company decide to fire a highly reputed researcher, a “rockstar,” as she was called on social media?

I mean, if you had a rockstar on your team, would you kick them out after the first problem? And why the hurry? Why the need to act so quickly that things ended up being handled so badly?

The actions Google took are probably the best sign that they may have been desperately looking for a reason to fire her. You could read the following Twitter thread from a former Googler and tech exec.

That led me to think about the reasons that could have driven them to do it:

- The paper’s content threatens the company in some way, so there was a real and urgent need to retract it.

- The work of the Ethical AI team threatens other Googlers, so Google management chose protecting them over doing things ethically.

- They were looking for an excuse to get her out, and her reaction gave them the perfect reason.

- Timnit is a recognized voice in AI, in ethics, and within Google, so keeping her after rejecting the paper would have been considered toxic for Google Brain management’s purposes.

In any case, they must have expected tons of passionate reactions on social media, imposed silence, and decided to wait until the storm passed.

Management Issues at Google Brain

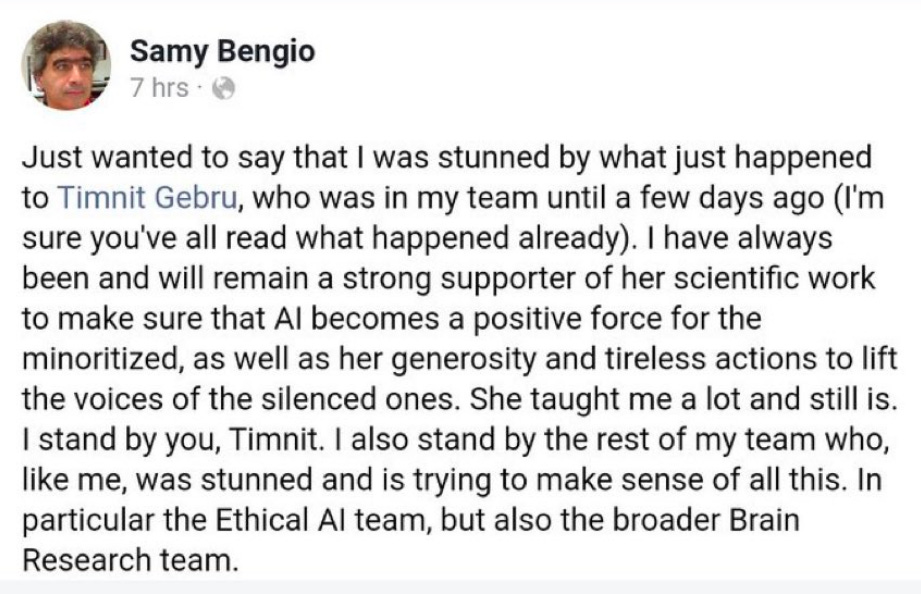

Something that came to my mind is that she had a manager, Samy Bengio, who was neither informed nor involved. Whether it was retracting the paper or telling her that the email was a mistake, it should have been her direct manager who did it.

From a managerial point of view, this only makes sense if they had already decided to cut her and wanted to avoid involving Samy Bengio. In any normal situation, her direct manager would have been the one to explain the problems with the paper and to talk to her about the way she wrote her email.

Jeff Dean sending the email accepting her resignation, and offering explanations about the review deadline that other Googlers disputed, discredits him as a manager and colleague. Wasn’t he thinking of the damage he was doing to himself, to his department, and to the people working in it?

Only Jeff Dean knows the answers to most of the open questions, but he won’t publish anything else on this topic, probably because he can’t, and probably because he is not the one writing the answers.

It also turns out that the best engineers are not necessarily the best managers. Jeff Dean is a legend at Google and in AI, but maybe he is not the best manager for his people.

I read a tweet from someone defending Jeff, saying that he wasn’t a politician, just an engineer. Sorry, but once you reach a VP position, you are.

AI Ethics

I didn’t want to miss the opportunity to share with you a brief view of the ethical challenges in AI, the importance of ethics in AI, and why it matters: AI has the potential to change the world, but the open questions are how, and in which direction.

The ethics of AI lies in the ethical quality of its predictions, the ethical quality of the end outcomes drawn from them, and the ethical quality of their impact on humans.

So the challenge of AI ethics is to avoid any harm caused by AI outcomes. The damage is often unintended, but when there is a lack of awareness of these issues, the potential for real harm is there.

A “harm” is caused when a prediction or end outcome negatively impacts an individual’s ability to establish their rightful personhood (harms of representation), leading to or independently impacting their ability to access resources (harms of allocation).

Even UNESCO is calling for policies and regulatory frameworks to ensure that these emerging technologies benefit humanity as a whole. Biased algorithmic systems can lead to unfair outcomes, discrimination, and injustice. There’s a high risk of encoding human biases and blind spots into these systems.

There’s a fierce debate about the interference of ethics in AI research. Some researchers invoke their contribution to science as an argument against being censored on ethical grounds.

If you’re interested in knowing more about AI ethics, you could visit The Hitchhiker’s Guide to AI Ethics.

The Content of the Paper

Maybe the content of the paper gives some context and helps explain what happened. MIT Technology Review published an analysis of its content: We read the paper that forced Timnit Gebru out of Google. Here’s what it says.

Azeem Azhar did a great job summarizing MIT Technology Review’s analysis in his LinkedIn article The Essential Ethical Quandary of Industry, where he raised the issue of Google’s biased ethical work.

Google says that the work its Ethical AI team does is important, but that work can’t be done by an in-house team. Self-regulation in this area will not work. It didn’t work with oil companies and climate change. It won’t work with tech firms.

On top of that, there’s a conflict of interest. Google is not interested in research that could cause it problems, cannot allow the team to work freely and independently, and probably wants an ethics team without ethics.

Companies and Research Relation

Andrew Ng also raised the open question of how research and companies should relate to each other, and of establishing balanced rules so that both sides share the same expectations.

So, what comes first? The research or the company? Probably the company that pays; you don’t bite the hand that feeds you.

But if you are not developing any commercial product, and you are working on ethics with a higher purpose in mind, such as minorities among end customers, which one comes first?

Toxicity and Diversity in the Workplace

Considering everything covered so far, there is enough information to understand what happened in this case. But the story is even worse: it reveals big issues of toxicity in the workplace and also raises problems with diversity.

As this article points out, Google employees have grumbled in recent years about ethics in artificial intelligence, the treatment of women, the treatment of Black employees, and transparency. When Google fired her, it touched all four of these issues.

You could also read the following MIT Technology Review article, which tells a terrible story and explains how well known these behaviors are in the industry and how far management goes to cover them up.

What About Google’s Answer?

Google CEO Sundar Pichai said that “We need to accept responsibility for the fact that a prominent Black, female leader with immense talent left Google unhappily” and “We need to assess the circumstances that led up to Dr. Gebru’s departure, examining where we could have improved and led a more respectful process”.

Also, trying to discredit her work does not seem like a good idea, given how well known Dr. Timnit Gebru is and how much impact she has had on AI, ethics, and the community.

The Best Place to Work

This sounds very strange when you have always thought of Google as the place to be, the best workplace, and it is still in the top ten. Is this toxic behavior perhaps limited to the Brain department? As Bob Sutton put it, “Don’t Be Evil? I fear they stopped using that slogan for a reason.”

You can find more details on the Standing with Dr. Timnit Gebru platform, which also lists supporters. At the time of writing, it has signatures from 2,695 Googlers and 4,302 others.

This firing publicly exposed a problem that concerns not only Timnit but the whole company. There’s a lot of work to do on AI ethics, diversity, and people management.

I wish something good could come out of this case, but I don’t think there’s any way back. Right now, it looks like a lose-lose situation for both Google and Timnit.

I hope you liked it. If so, please share it! Do not hesitate to add your comments. And, if you want to stay up to date, don’t miss my free newsletter, where this post began.

Thanks for reading.

Also published on Medium.
